Extreme Light

What can you do with a camera that is so fast that it can freeze light in motion?

This is the focus of Professor Daniele Faccio and his Extreme Light group, based in the School of Physics and Astronomy.

The speed of this camera gives it a crucial advantage over conventional cameras. It is so fast that it can record ‘when’ light arrives at its sensor, not just ‘where’. With this timing information, the camera can make images in very unconventional ways. No longer does an object have to be in the field of view to be in the picture. This camera can see an object hidden around a corner or make an image of something embedded within or behind a wall.

“A few years ago, looking around corners or behind walls with a camera just sounded bonkers. Now we have a system in the lab that can give you full-colour 3D imaging behind a corner,” says Professor Faccio.

Imaging around corners will make it possible for driver-assisted or autonomous vehicles to anticipate unseen dangers or drive through heavy fog. Search and rescue missions can assess risk or locate trapped people before entering dangerous structures.

Light, of course, is the fundamental component of imaging. An image forms when a photosensitive material or sensor is exposed to light. Light is made up of particles known as photons. These photons propagate through a vacuum at just under 300 million metres per second.

The ability to capture light in flight relies on cameras that have a frame rate of a trillion frames per second. It also relies on computational techniques that can reconstruct meaningful images from an extremely small amount of collected light.

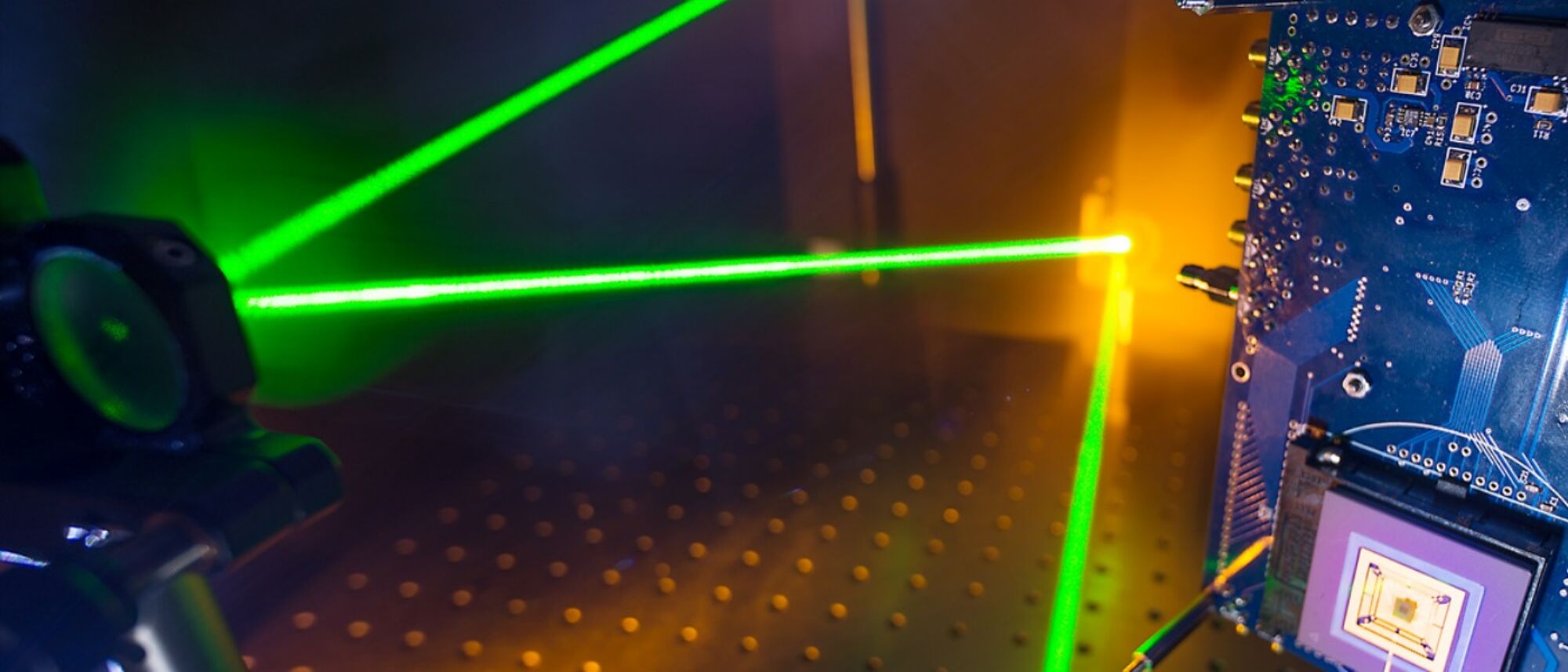

This is because these technologies rely on detectors that collect a single photon at a time. This technique is called Time-Correlated Single Photon Counting (TCSPC). An initial laser pulse starts a timer on the camera. When the resulting photons reach the sensor, the time they took to get there is recorded.
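The timing idea can be sketched in a few lines of Python. This is only an illustration, not the group's software: the simulated detector, the 20 picosecond timing jitter, the photon count and the 1.5 metre path are all assumptions chosen for the example. It shows how a histogram of single-photon arrival times, binned at one picosecond (the interval in which light travels about 0.3 mm), can be turned back into a distance.

```python
import random
from collections import Counter

C = 299_792_458.0      # speed of light in vacuum, metres per second
BIN_WIDTH_S = 1e-12    # 1 picosecond bins, i.e. a trillion time slots per second

def simulate_arrival_times(path_length_m, n_photons, jitter_s, seed=0):
    """Hypothetical detector: one time stamp per photon, with Gaussian timing jitter."""
    rng = random.Random(seed)
    true_time = path_length_m / C
    return [rng.gauss(true_time, jitter_s) for _ in range(n_photons)]

def build_histogram(arrival_times_s):
    """Count how many photons fall into each time bin."""
    return Counter(int(t // BIN_WIDTH_S) for t in arrival_times_s)

def estimate_path_length(histogram):
    """Treat the centre of the fullest bin as the flight time and convert it to metres."""
    peak_bin, _ = max(histogram.items(), key=lambda item: item[1])
    peak_time = (peak_bin + 0.5) * BIN_WIDTH_S
    return peak_time * C

# Assumed scenario: photons that travelled 1.5 m, timed with 20 ps of jitter.
times = simulate_arrival_times(path_length_m=1.5, n_photons=5000, jitter_s=20e-12)
hist = build_histogram(times)
estimate = estimate_path_length(hist)
print(f"estimated path length: {estimate:.3f} m")
```

No single photon gives a reliable distance on its own. The estimate comes from the shape of the histogram built up over many laser pulses.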

Light in flight makes imaging around corners possible. A pulse of laser light bounces off a scattering wall, spreads out, then strikes a hidden target object around a corner. The detector captures some of the photons returning from this object to the scattering wall. Advanced computational techniques are then used to determine an image from this pattern of photons. This process mirrors the principles of sonar or echolocation.
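To see how timing alone can reveal a position, consider a toy version of the problem. The sketch below is an assumption-laden illustration, not the reconstruction method used in the lab: it works in two dimensions, treats the hidden object as a single point, ignores noise, and assumes the legs from the laser to the wall and from the wall to the detector have already been subtracted. The function names and the geometry are hypothetical. Each measured path length places the hidden point on an ellipse, and a simple grid search finds where the ellipses agree.

```python
import math

def path_length(laser_spot, hidden_point, wall_point):
    """Distance from the laser spot on the wall to the hidden point and back to a wall point."""
    return math.dist(laser_spot, hidden_point) + math.dist(hidden_point, wall_point)

def locate_hidden_point(laser_spot, wall_points, measured_lengths,
                        grid_step=0.05, extent=2.0):
    """Grid search for the hidden position that best explains the measured path lengths."""
    best_point, best_error = None, float("inf")
    steps = int(round(extent / grid_step))
    for ix in range(-steps, steps + 1):
        for iy in range(1, steps + 1):  # assume the hidden scene lies in front of the wall (y > 0)
            candidate = (ix * grid_step, iy * grid_step)
            error = sum(
                (path_length(laser_spot, candidate, w) - d) ** 2
                for w, d in zip(wall_points, measured_lengths)
            )
            if error < best_error:
                best_point, best_error = candidate, error
    return best_point

# Assumed geometry in metres: the wall runs along y = 0 and the laser hits it at the origin.
laser_spot = (0.0, 0.0)
wall_points = [(-0.6, 0.0), (-0.3, 0.0), (0.3, 0.0), (0.6, 0.0)]
true_point = (0.5, 1.0)

# Flight time multiplied by the speed of light gives these path lengths.
measured = [path_length(laser_spot, true_point, w) for w in wall_points]
estimate = locate_hidden_point(laser_spot, wall_points, measured)
print(estimate)
```

A real scene contains many reflecting points and very few photons, which is why far more sophisticated computational techniques are needed in practice.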

The camera collects the light, the computer makes the image

The combination of camera and computation to make an image has parallels with our own way of seeing. Our eyes collect light, which becomes nerve signals that our brain interprets as an image. In this sense, a mirage is just our brain applying the wrong computational technique to the light collected by the eye.

“The standard way of thinking of an image is you've got a grid of pixels and they give you an image,” explains Professor Faccio.

“For a computer, an image is just a string of numbers. When you start thinking like that – because you need to if you want to make something new – it's not important where the string of numbers comes from. With a single photon detector, it's only telling us when the photons arrive. It has no spatial information. But with the right sort of computational techniques you can still pull an image out of that.”

Conventional digital cameras evolved over recent years by adding more capacity to the imaging sensor – you were getting more and more megapixels. This is now changing as new types of cameras combine advanced computational techniques with a variety of imaging sensors. Now fewer and fewer pixels are needed, as machine learning and artificial intelligence can construct images out of this more restricted but more varied data, even to the ultimate point of single pixel and single photon imaging.

Cameras for watching you think

The light-in-flight technique can also be applied to imaging through opaque objects, such as walls. As the human body is a wall – at least to a physicist – this has huge potential for medical imaging. While there are limitless possible applications for cheap, portable imaging within the human body, Professor Faccio sees exciting potential in the area of brain imaging.

“Where's the edge of science and our knowledge at the moment? Where is it that we're blocked and we don't know where to go? We’re at a complete loss with the human brain. We don't really know how it works. That's the reality. I find that fascinating,” says Professor Faccio.

His research aims to use the techniques of light-in-flight imaging to demonstrate how a small portable device could image deep brain blood oxygenation. This is currently possible in functional magnetic resonance imaging (fMRI), but a cheap and wearable device that could perform this function might enable a paradigm shift in the science of understanding the brain.

When you think, your neurons need energy. They get that energy by drawing in an increased flow of blood that has a high oxygenation level.

If you’re able to see these changes in oxygenation level, it’s possible to map this to what the subject is thinking at the time. These patterns of blood oxygenation have the potential to become a visual language of a person’s thoughts. Using machine learning, you can then train a computer to make associations between blood oxygenation and thoughts.

“Can we build a device to read thoughts? When I'm thinking something or seeing something, can I read that thought from the human brain signals my imaging device is recording?” asks Professor Faccio.

“We could give this area of research its enabling technology. A lesson learnt in science is that major breakthroughs follow a new ground-breaking technology. Can I make a hat that can brain read? I don't know the answer to that, but it’s a really exciting thought.

“There's also a very interesting responsible research and innovation aspect here too. I don't think people are ready for hats that can read your brain.

“How do you share the information that you're reading off? How does that modify your interaction with other people? There's lots of bad things you could do with this kind of technology. Are those going to override the good things? It's not clear, but it's something that we need to start talking about. The general feeling is that in the future – not too far away in the future – this is going to happen, whether we like it or not.”

How does imaging through objects work?

The process uses a laser pulse on the object. Then, a single photon camera with the ability to image at a trillion frames per second is used to capture the very low light levels that get through the object.

Crucially, it relies on the ability of the camera to give a very precise timing for the arriving photons. You not only have information on where the light arrives on the other side but, most importantly, when it arrives. Computational techniques can then unravel the image using that information.

“We haven't started working on the brain yet. At the moment we can see through five centimetres of completely opaque material. We can see objects that are embedded inside there, so we're making the first progress,” says Professor Faccio.

“When you go through a diffusive material such as the human brain or an opaque wall, light is propagating through the object. Think of heat: if you take a metal bar and you heat it at one end, it will slowly, gradually, start to heat up as the heat moves along the bar. Essentially the heat is diffusing.

“Light in an opaque material does the same thing. It doesn't go in straight lines anymore. The equations for light are exactly the same as those heat propagation equations. The light is diffusing outwards in all directions.

“You can imagine the light as it is propagating. The photons hit your target object and then diffuse around it and come out again. When you capture the light on the other side of the object, you don't actually see any direct evidence that the object was there. Using your ‘trillion frames per second’ camera, you can measure the timing information of how long the photons took to arrive.

“I can see this. If I take the object out, I'll have a lot more photons that arrived earlier. If I put the object in, there are a lot more photons that arrived later.

“By combining the spatial and timing information with some advanced computational processing, you can reconstruct the shape of the object that gave you this timing pattern.”
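The heat analogy in the quote above can be tried out numerically. The sketch below is a minimal illustration under stated assumptions, not a model of light in tissue or in a wall: it solves the one-dimensional diffusion equation with a basic explicit finite-difference scheme, in arbitrary units, with insulated ends. The function name `diffuse` and the parameter `alpha` are chosen for this example. Energy deposited at one end of a bar of 50 cells spreads out, and the far end responds only gradually.

```python
def diffuse(profile, alpha, steps):
    """Explicit finite-difference steps of the 1D diffusion equation with insulated ends.

    alpha = D * dt / dx**2 must be at most 0.5 for this scheme to stay stable.
    """
    if not 0 < alpha <= 0.5:
        raise ValueError("alpha must be in (0, 0.5] for stability")
    u = list(profile)
    for _ in range(steps):
        padded = [u[0]] + u + [u[-1]]  # mirror the end values: nothing leaks out of the ends
        u = [
            padded[i] + alpha * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
            for i in range(1, len(padded) - 1)
        ]
    return u

# All of the energy starts in the first cell, like heating one end of a metal bar.
bar = [0.0] * 50
bar[0] = 1.0

far_end = []  # value in the last cell, sampled every 400 time steps
for _ in range(5):
    bar = diffuse(bar, alpha=0.25, steps=400)
    far_end.append(bar[-1])

print(far_end)
```

The value at the far end rises steadily while the total stays constant. In the same way, most photons that cross a diffusive material arrive late and spread out, which is why the time of arrival carries so much of the information.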

The Research Hub

The Extreme Light group will be setting up a new lab within Glasgow’s new £112 million ARC. It will be part of the Quantum and Nanotechnology space within this new facility. This will enable the group to work closely with other Glasgow researchers focused on quantum technologies for imaging.

Beyond these direct relationships, the Research Hub is designed to support collaboration on big ideas across broader areas of research.

“The aim is to bring together world-leading researchers that have these big overarching ideas that can span across disciplines. It's a very exciting opportunity, not just for the academics but also for the students and the post-docs. I'm hoping an intellectually vibrant community will form here,” says Professor Faccio.

“One area we see potential for breakthroughs in the Research Hub, again, is the brain. Can we use optical networks to build artificial neural networks, in other words, something like an artificial brain? Can we add some quantum into this idea, to make a quantum brain?”

Professor Faccio also sees the space creating potential for inspiring new ideas and new research.

“I believe that the most successful things are the ones that evolve, not forcefully but somehow emerge from an evolution process.

“Hopefully, this is what we’ll get from these very different areas being together. Someone from chemistry or biology will come at you with a question and you think: 'oh, but surely that's not possible?’ Then: ‘oh, but maybe it is?’ You start asking questions that haven't been asked before. This is what's really exciting about the Research Hub.”