Emulation & uncertainty quantification

We develop emulation methods and computationally tractable statistical models of complex processes such as mathematical models and computational digital twins.

Staff

Postgraduate research students

Emulation and Uncertainty Quantification - Example Research Projects

Information about postgraduate research opportunities and how to apply can be found on the Postgraduate Research Study page. Below is a selection of projects that could be undertaken with our group.

Exploring Hybrid Flood modelling leveraging GPU/Exascale computing (PhD)

Supervisors: Andrew Elliott, Lindsay Beevers (University of Edinburgh), Claire Miller, Michele Weiland (University of Edinburgh)

Relevant research groups: Modelling in Space and Time, Environmental, Ecological Sciences & Sustainability, Machine Learning and AI, Emulation and Uncertainty Quantification

Funding: This project is competitively funded through the ExaGEO DLA.

Flood modelling is crucial for understanding flood hazards, both now and in the future under climate change. Models produce inundation extents (or flood footprints) that outline the areas at risk, which helps to manage our increasingly complex infrastructure network as the climate changes. Our ability to make fast, accurate predictions of fluvial inundation extents is important for disaster risk reduction. At the same time, capturing uncertainty in forecasts or predictions is essential for efficient planning and design. Both aims require methods that are computationally efficient whilst maintaining accurate predictions. Current Navier-Stokes physics-based models are computationally intensive; this project would therefore explore hybrid flood models that fuse GPU computing and ML with physics-based models, and would investigate scaling the numerical models to large-scale HPC resources.

Scalable approaches to mathematical modelling and uncertainty quantification in heterogeneous peatlands (PhD)

Supervisors: Raimondo Penta, Vinny Davies, Jessica Davies (Lancaster University), Lawrence Bull, Matteo Icardi (University of Nottingham)

Relevant research groups: Modelling in Space and Time, Environmental, Ecological Sciences & Sustainability, Machine Learning and AI, Emulation and Uncertainty Quantification, Continuum Mechanics

Funding: This project is competitively funded through the ExaGEO DLA.

While only covering 3% of the Earth’s surface, peatlands store >30% of terrestrial carbon and play a vital ecological role. Peatlands are, however, highly sensitive to climate change and human pressures, and therefore understanding and restoring them is crucial for climate action. Multiscale mathematical models can represent the complex microstructures and interactions that control peatland dynamics but are limited by their computational demands. GPU and Exascale computing advances offer a timely opportunity to unlock the potential benefits of mathematically-led peatland modelling approaches. By scaling these complex models to run on new architectures or by directly incorporating mathematical constraints into GPU-based deep learning approaches, scalable computing will deliver transformative insights into peatland dynamics and their restoration, supporting global climate efforts.

Scalable Inference and Uncertainty Quantification for Ecosystem Modelling (PhD)

Supervisors: Vinny Davies, Richard Reeve (BOHVM, UoG), David Johnson (Lancaster University), Christina Cobbold, Neil Brummitt (Natural History Museum)

Relevant research groups: Modelling in Space and Time, Environmental, Ecological Sciences & Sustainability, Machine Learning and AI, Emulation and Uncertainty Quantification

Funding: This project is competitively funded through the ExaGEO DLA.

Understanding the stability of ecosystems and how they are impacted by climate and land use change can allow us to identify sites where biodiversity loss will occur and help to direct policymakers in mitigation efforts. Our current digital twin of plant biodiversity – https://github.com/EcoJulia/EcoSISTEM.jl – provides functionality for simulating species through processes of competition, reproduction, dispersal and death, as well as environmental changes in climate and habitat, but it would benefit from enhancement in several areas. The three this project would most likely target are the introduction of a soil layer (and the improvement of the modelling of soil water); improving the efficiency of the code to handle a more complex model and to allow stochastic and systematic Uncertainty Quantification (UQ); and developing techniques for scalable inference of missing parameters.

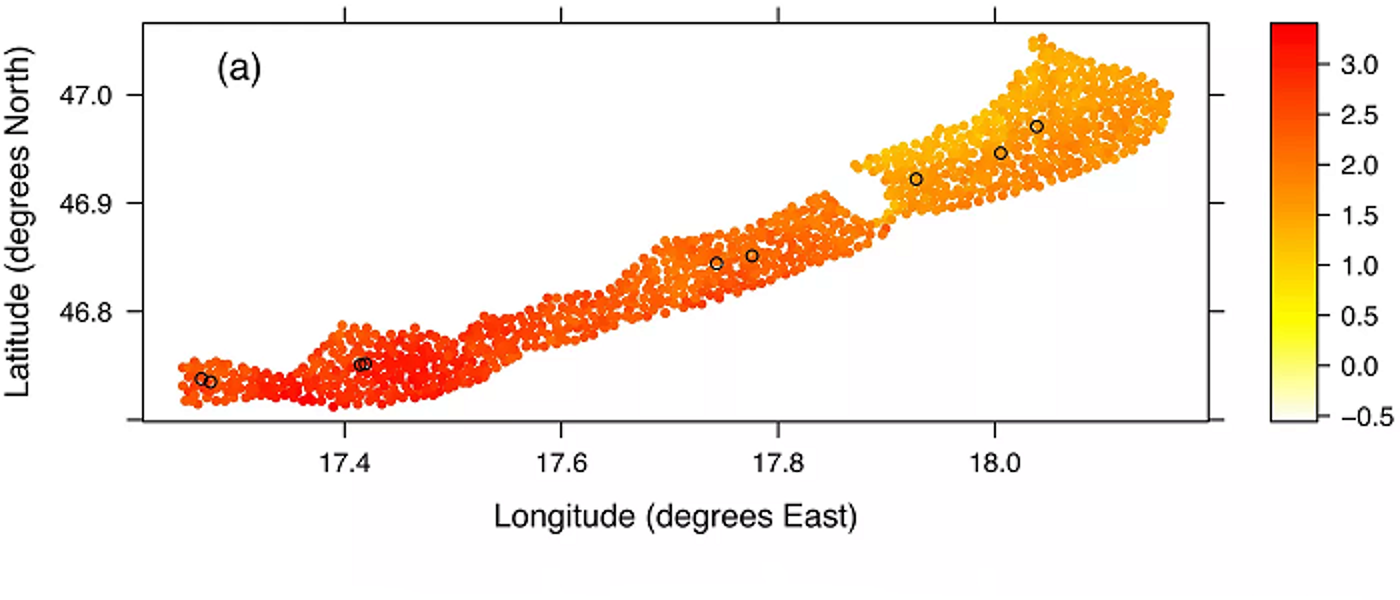

Smart-sensing for systems-level water quality monitoring (PhD)

Supervisors: Craig Wilkie, Lawrence Bull, Claire Miller, Stephen Thackeray (Lancaster University)

Relevant research groups: Machine Learning and AI, Emulation and Uncertainty Quantification, Environmental, Ecological Sciences & Sustainability

Funding: This project is competitively funded through the ExaGEO DLA.

Freshwater systems are vital for sustaining the environment, agriculture, and urban development, yet in the UK, only 33% of rivers and canals meet ‘good ecological status’ (JNCC, 2024). Water monitoring is essential to mitigate the damage caused by pollutants (from agriculture, urban settlements, or waste treatment), and while sensors are increasingly affordable, coverage remains a significant issue. New techniques for edge processing and remote power offer one solution, providing alternative sources of telemetry data. However, methods that combine such information into systems-level sensing for water are not as mature as in other applications (e.g., the built environment). In response, this project considers procedures for computation at the edge, decision-making, and data/model interoperability.

Statistical Emulation Development for Landscape Evolution Models (PhD)

Supervisors: Benn Macdonald, Mu Niu, Paul Eizenhöfer (GES, UoG), Eky Febrianto (Engineering, UoG)

Relevant research groups: Modelling in Space and Time, Environmental, Ecological Sciences & Sustainability, Machine Learning and AI, Emulation and Uncertainty Quantification

Funding: This project is competitively funded through the ExaGEO DLA.

Many real-world processes, including those governing landscape evolution, can be effectively described mathematically via differential equations. These equations describe how processes, e.g. the physiography of mountainous landscapes, change with respect to other variables, e.g. time and space. Conventional approaches for performing statistical inference involve repeated numerical solving of the equations: every time the parameters of the equations are changed in a statistical optimisation or sampling procedure, the equations need to be re-solved numerically. The associated large computational cost limits advancements when scaling to more complex systems, the application of statistical inference and machine learning approaches, as well as the implementation of more holistic approaches to Earth System science. This leads to the need for an accelerated computing paradigm involving highly parallelised GPUs for the evaluation of the forward problem.

Beyond advanced computing hardware, emulation is becoming a more popular way to tackle this issue. The idea is that first the differential equations are solved as many times as possible and then the output is interpolated using statistical techniques. Then, when inference is carried out, the emulator predictions replace the differential equation solutions. Since prediction from an emulator is very fast, this avoids the computational bottleneck. If the emulator is a good representation of the differential equation output, then parameter inference can be accurate.
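The emulation workflow described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration rather than the project's method: a logistic growth equation stands in for an expensive landscape evolution model, the names `simulate`, `rbf` and `emulate` are hypothetical, and the emulator is a small Gaussian process interpolant with a fixed RBF kernel and hand-picked length scale.

```python
import numpy as np

def simulate(r, y0=0.1, t_end=5.0, n_steps=500):
    """Stand-in for an expensive forward model (assumption): solve the
    logistic equation dy/dt = r*y*(1-y) with RK4 and return y(t_end)."""
    h = t_end / n_steps
    f = lambda y: r * y * (1.0 - y)
    y = y0
    for _ in range(n_steps):
        k1 = f(y)
        k2 = f(y + 0.5 * h * k1)
        k3 = f(y + 0.5 * h * k2)
        k4 = f(y + h * k3)
        y += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return y

def rbf(a, b, length=0.15):
    """Squared-exponential kernel between two 1-D arrays of parameter values."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

# Step 1: solve the differential equation at a set of design parameter values.
r_train = np.linspace(0.2, 2.0, 25)
y_train = np.array([simulate(r) for r in r_train])

# Step 2: interpolate the outputs with a Gaussian process (constant mean,
# small jitter on the diagonal for numerical stability).
mean = y_train.mean()
K = rbf(r_train, r_train) + 1e-8 * np.eye(len(r_train))
alpha = np.linalg.solve(K, y_train - mean)

def emulate(r_new):
    """Emulator prediction of y(t_end) for new parameter values."""
    r_new = np.atleast_1d(np.asarray(r_new, dtype=float))
    return mean + rbf(r_new, r_train) @ alpha

# Step 3: inference uses the emulator in place of the solver. Here a simple
# grid search picks the parameter whose emulated output best matches the data.
r_true = 1.1
y_obs = simulate(r_true)  # synthetic "observation"
grid = np.linspace(0.2, 2.0, 2001)
r_hat = grid[np.argmin((emulate(grid) - y_obs) ** 2)]
print(f"true r = {r_true}, estimated r = {r_hat:.3f}")
```

The grid search evaluates the emulator 2001 times but the solver only 25 times, which is where the saving comes from. In practice the kernel hyperparameters would be estimated from the simulations, and the grid search would usually be replaced by an optimiser or a sampler.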

The student will begin by working on parallelising the numerical solver of the mathematical model via GPUs. This means that many more solutions can be generated on which to build the emulator, in a timeframe that is feasible. Then, they will develop efficient emulators for complex landscape evolution models, as the PhD project evolves.

Structured ML for physical systems (PhD or MSc)

Supervisors: Lawrence Bull

Relevant research groups: Machine Learning and AI, Emulation and Uncertainty Quantification

When using Machine Learning (ML) for science and engineering, an alternative mindset is required to build sensible representations from data. Unlike other applications (e.g. large language models), the datasets are relatively small and curated - i.e. they are collected via experiments rather than scraped from the internet. The limited variance of training data typically renders learning by ‘brute force’ infeasible. Instead, we must encode domain-specific knowledge within ML algorithms to enforce structure and constrain the space of possible models.

This project covers ML for physical systems (Karniadakis, 2021) and looks to integrate machine learning with applied mathematics - fusing scientific knowledge with insights from data. Methods will investigate various levels of constraints on ML predictions - including smoothness, invariances, etc. Relevant topics include:

- physics-informed machine learning (Girin, 2020)

- scientific machine learning (Pförtner, 2022)

- hybrid modelling
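As a small illustration of constraining the space of possible models, the Python sketch below encodes a known invariance directly into the features of a regression model. It is a toy example under stated assumptions, not a method from the cited works: the target function `truth`, the polynomial feature maps `features_free` and `features_even`, and the noise level are all hypothetical choices. The data are observed only for positive inputs, and the constrained model is restricted to even functions, f(x) = f(-x), so it is symmetric by construction.

```python
import numpy as np

rng = np.random.default_rng(0)

def truth(x):
    """Hypothetical physical response, known a priori to be even in x."""
    return np.cos(2.0 * x)

# Small, curated dataset: a dozen noisy measurements at positive x only.
x_train = np.linspace(0.1, 1.5, 12)
y_train = truth(x_train) + 0.005 * rng.normal(size=x_train.size)

def features_free(x):
    """Unconstrained quartic basis: 1, x, x^2, x^3, x^4."""
    return np.vander(x, 5, increasing=True)

def features_even(x):
    """Basis restricted to even powers, so every fitted model is symmetric."""
    return np.stack([np.ones_like(x), x**2, x**4], axis=1)

# Ordinary least squares for both models.
w_free, *_ = np.linalg.lstsq(features_free(x_train), y_train, rcond=None)
w_even, *_ = np.linalg.lstsq(features_even(x_train), y_train, rcond=None)

# Evaluate on mirrored inputs that never appear in the training data.
x_test = np.linspace(-1.5, 0.0, 50)
pred_free = features_free(x_test) @ w_free
pred_even = features_even(x_test) @ w_even

err_free = np.sqrt(np.mean((pred_free - truth(x_test)) ** 2))
err_even = np.sqrt(np.mean((pred_even - truth(x_test)) ** 2))
print(f"RMSE on x < 0: unconstrained {err_free:.3f}, even-constrained {err_even:.3f}")
```

The constrained model has fewer parameters and cannot violate the symmetry, so one would expect it to transfer better to the unseen negative inputs on a problem like this, although that depends on the symmetry assumption actually holding.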

Generating deep fake left ventricles: a step towards personalised heart treatments (PhD)

Supervisors: Andrew Elliott, Vinny Davies, Hao Gao

Relevant research groups: Machine Learning and AI, Emulation and Uncertainty Quantification, Biostatistics, Epidemiology and Health Applications, Imaging, Image Processing and Image Analysis

Personalised medicine is an exciting avenue in the field of cardiac healthcare where an understanding of patient-specific mechanisms can lead to improved treatments (Gao et al., 2017). The use of mathematical models to link the underlying properties of the heart with cardiac imaging offers the possibility of obtaining important parameters of heart function non-invasively (Gao et al., 2015). Unfortunately, current estimation methods rely on complex mathematical forward simulations, so a solution takes hours, a time frame not suitable for real-time treatment decisions. To increase the applicability of these methods, statistical emulation methods have been proposed as an efficient way of estimating the parameters (Davies et al., 2019; Noè et al., 2019). In this approach, simulations of the mathematical model are run in advance and then machine learning based methods are used to estimate the relationship between the cardiac imaging and the parameters of interest. These methods are, however, limited by our ability to understand how cardiac geometry varies across patients, which is in turn limited by the amount of data available (Romaszko et al., 2019). In this project we will look at AI based methods for generating fake cardiac geometries which can be used to increase the amount of data (Qiao et al., 2023). We will explore different types of AI generation, including Generative Adversarial Networks and Variational Autoencoders, to understand how we can generate better 3D and 4D models of the fake left ventricles and create an improved emulation strategy that can make use of them.

Seminars

Regular seminars relevant to the group are held as part of the Statistics seminar series. The seminars cover various aspects across the AI3 initiative and usually span multiple groups. You can find more information on the Statistics seminar series page, where you can also subscribe to the seminar series calendar.

Impressive advancements in mathematical modelling and digital twins (computer twins of complex systems) have highlighted a need for properly understanding parameter uncertainty and model calibration. In recent years, the Emulation and Uncertainty Quantification group has developed emulation methods, computationally tractable statistical models of complex processes, which help to infer parameters and uncertainty estimates in complex mathematical models.

The group collaborates heavily with the Continuum Mechanics group within Applied Mathematics and leads parts of the SoftMech Centre for Healthcare in Mathematics. The group recently won the RSS Mardia prize to host workshops on environmental digital twins as part of the Analytics for Digital Earth project.